by Patrick Murray

There has been some head-scratching over the seeming differences between the results of recent polls from Monmouth, CNN, and Marist-NPR on the debt ceiling. I posted a Twitter thread about this, but here it is unrolled and with some additional context.

If reputable pollsters have very different results on the same topic, first ask yourself this question: Is it an issue that regularly gets talked about where people have formed long-standing clear opinions (e.g. abortion), or is it an issue that – while it may be of crucial importance to the body politic and has been covered by the media – is one where public awareness and sense of salience are actually low (e.g. debt ceilings)? If the latter, then the likely cause of varying poll results is question wording.

Yes, question wording really does matter when public knowledge and engagement are low. And that’s the story behind the different findings on opinion about raising the debt ceiling.

QUESTION: Should raising the debt ceiling be a “clean” process? Here Monmouth & Marist seem to agree, but CNN shows much lower support.

- CNN: 24% “Congress should raise the debt ceiling no matter what.”

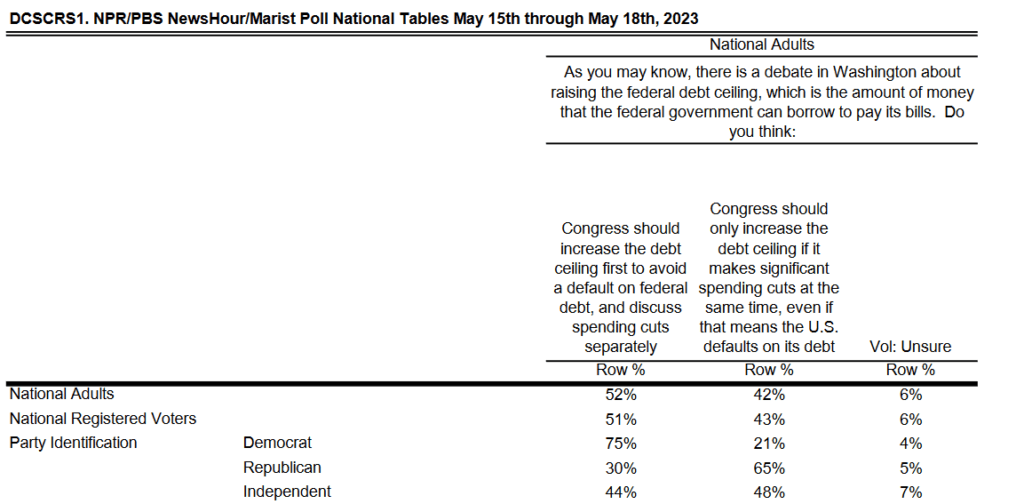

- Marist/NPR: 52% “Congress should increase the debt ceiling first to avoid a default on federal debt, and discuss spending cuts separately.”

- Monmouth: 51% “These two issues [debt ceiling and negotiations over spending on federal programs] should be dealt with separately.”

Or should raising the debt limit be dependent on federal spending cuts? Here, all three polls appear to differ from one another – by a lot!

- CNN: 60% “Congress should only raise the debt ceiling if it cuts spending at the same time.”

- Marist/NPR: 42% “Congress should only increase the debt ceiling if it makes significant spending cuts at the same time, even if that means the U.S. defaults on its debt.”

- Monmouth: 25% “Raising the debt ceiling should be tied to negotiations over spending on federal programs.”

This appears to be a case of the old adage: “Question wording matters most when people know the least.” Some issues are so salient that poll results are impervious to wording variations (e.g. abortion). Others are not – like the debt ceiling. Half the public say they have been paying close attention to the current debate (CNN & Monmouth) and half feel they really understand the consequences of default (Monmouth). Because both figures are self-reported, the true level of “high” knowledge is likely lower. In other words, most Americans are not giving a lot of thought to what they think about the debt ceiling and have not really talked about it until a pollster asks them a question.

Let’s take a look at each poll’s question wording. First, the CNN poll. Note they ask an awareness question with some context information on the issue before asking the debt handling question. Also, the debt question has a third response option (“allow default”).

![CNN. Q23. As you may know, there is a limit to the amount of money the federal government can owe that is sometimes called the "debt ceiling." The Secretary of the Treasury estimates that the government will not have enough money to pay all of its debts and keep all existing government programs running unless Congress raises the debt ceiling by early June. How closely have you been following the discussions between Joe Biden and Congressional leaders on the debt ceiling? [RESPONSES ROTATED IN ORDER FOR HALF/IN REVERSE ORDER FOR HALF]. May 17-20, 2023. NET Closely 50%, Very closely 14%, Somewhat closely 36%, NET Not closely 50%, Not too closely 28%, Not closely at all 22%, No opinion 0%.](https://www.monmouth.edu/polling-institute/wp-content/uploads/sites/22/2023/05/image-2-1024x234.png)

![CNN. Q24. Which comes closest to your view? [RESPONSES ROTATED IN ORDER FOR HALF/IN REVERSE ORDER FOR HALF]. May 17-20, 2023. Congress should raise the debt ceiling no matter what 24%. Congress should only raise the debt ceiling if it cuts spending at the same time 60%. Congress should not raise the debt ceiling, and allow the US to default on its debts 15%. No opinion *](https://www.monmouth.edu/polling-institute/wp-content/uploads/sites/22/2023/05/image-1-1024x239.png)

Next is Marist. They did not ask an opening awareness question nor did they provide a lot of context information.

Finally, here’s Monmouth, which asks a number of questions before the debt handling one. There is some potential framing for the respondent in those intervening questions, although the content is different from CNN’s intro. Also, Monmouth’s is the only poll that provides an explicit “no opinion” option.

![Image. Monmouth University. 5. How much have you read or heard about the recent debate over raising the federal debt ceiling – a lot, a little, or nothing at all? May 2023. A lot 45%, A little 40%, Nothing at all 15%, (n) (981). 6. How much do you feel you understand what raising, or not raising, the debt ceiling would mean for the U.S. economy? Would you say you understand this a lot, a little, or not at all? 7. Some people say the country will suffer significant economic problems if the debt ceiling is not raised. Do you think these claims are accurate or are they exaggerated, or do you have no opinion on this? 8. Do you approve or disapprove of the way each of the following is handling the debt ceiling issue? [ITEMS WERE ROTATED] A. President Biden B. The Republicans in Congress C. The Democrats in Congress 9. Do you think that raising the debt ceiling should be tied to negotiations over spending on federal programs, or should these two issues be dealt with separately, or do you have no opinion on this? May 2023. Negotiations should be tied 25%, Dealt with separately 51%, No opinion 24%, (n) (981).](https://www.monmouth.edu/polling-institute/wp-content/uploads/sites/22/2023/05/image-4.png)

There’s a lot going on here. The big difference between the Monmouth and Marist results appears to be the former’s inclusion of the explicit “no opinion” in the question as read. This seems to lessen the number of respondents who select the “tied to spending/cuts” response for Monmouth compared with Marist, which only records “don’t know” as an option if the respondent volunteers that they cannot choose between the two stated responses. This also suggests that the “clean” debt bill option has somewhat more deliberative support than the one that ties it to spending cuts. However, that’s just one possible read of the variance.
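One rough way to see how much an explicit “no opinion” option can move the topline numbers is to re-percentage each poll over only the respondents who picked a substantive answer. The sketch below does that with the figures quoted above. It is an illustration, not a method any of the three pollsters used; the function name and dictionary labels are made up for this example, and because Marist’s volunteered “don’t know” share is not quoted here, it is simply left out.

```python
def share_among_opinion_holders(results, no_opinion_key="No opinion"):
    """Re-percentage results over respondents who chose a substantive option."""
    # Drop the "no opinion" row, if the poll reported one.
    substantive = {k: v for k, v in results.items() if k != no_opinion_key}
    total = sum(substantive.values())
    # Express each remaining option as a share of the reduced base.
    return {k: round(100 * v / total, 1) for k, v in substantive.items()}


# Topline percentages as quoted in this post (labels shortened for the example).
monmouth = {
    "Tied to spending negotiations": 25,
    "Dealt with separately": 51,
    "No opinion": 24,
}
marist = {
    "Only with spending cuts": 42,
    "Raise first, discuss cuts separately": 52,
}

monmouth_rebased = share_among_opinion_holders(monmouth)
marist_rebased = share_among_opinion_holders(marist)

for name, rebased in [("Monmouth", monmouth_rebased), ("Marist/NPR", marist_rebased)]:
    print(name, rebased)
```

On this crude rebasing, the “separately” option is the choice of roughly two-thirds of Monmouth respondents who offered an opinion, compared with a bit over half for the comparable Marist option. That fits the reading above, but it is only arithmetic on toplines and says nothing about how individual respondents would have answered a differently worded question.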

The difference between Monmouth/Marist and CNN, though, is a little harder to pin down. Marist’s question specifies that tying the debt ceiling to spending cuts may lead to default, which may make that option less attractive than in CNN’s question. CNN includes a separate, third response about allowing default, thus making the consequences of spending cuts in their question less alarming than in Marist’s. It may also introduce some double-barreled potential (i.e., tapping opinion on more than one issue in the same question). Monmouth’s question does not reference a possible default at all, which means that some respondents may think about that outcome in considering whether to choose the “tie it to spending” option and others may not.

It’s also possible that the big difference with CNN compared to the other two polls is a “3-response” conundrum, where in the absence of a fully formed opinion respondents gravitate to the middle option. One thing to note about the CNN question is that polling generally shows majority support for specific federal spending programs. The results of this debt question would seem to contradict those findings. I think the “no matter what” wording in CNN’s clean bill response option and the “damn the torpedoes, let’s go into default” option at the other end of the spectrum may make the middle response option that much more “reasonable” for people regardless of its actual content.

So, what’s my take on the polls? When polling low awareness/salience issues, there are no perfect questions. We end up tapping into somewhat ephemeral public opinion where there are few rights or wrongs in question wording. It’s the interpretation of the results we need to be careful about. You cannot assume that poll participants are responding literally to how a question is worded. Therefore, the more questions pollsters ask on these types of issues, the better able we are to get a handle on the conditions under which public opinion is malleable.

That also requires folks in the media to actually READ the questions. It’s the first thing I say to journalists when I’m asked about any variations between polls. Many of you may shake your heads and say “Nah, that can’t be it.” But it often is. If only we could take a sample of public opinion like we extract a biological specimen. But we can’t. Measuring how human beings form, express, and act on their attitudes is an inexact science because the very nature of opinion is always in flux.

As to my takeaway on the debt issue? Most Americans don’t have a strong opinion on the best way to raise the ceiling or what that even means in broader terms. And they won’t have one unless there’s a default that impacts them directly. What we do know is that the public sees a partisan debate over the issue. Which means the most likely outcome is that partisan identity will drive who gets blamed for any unhappiness that comes out of a deal.